To understand the new way of searching online, you first need to understand what an algorithm is and why it's time to focus on original content.

Algorithm optimization for content ranking has become increasingly important as the internet continues to expand and search engines strive to keep up.

As the technology behind search engines evolves, optimization of algorithms can help maximize the relevance of content returned in search engine results.

Optimizing algorithms will not only improve the user experience on Google, Bing, and other major search engines but also provide a better chance of improving overall rankings.

To optimize for these algorithms, you need to understand the fundamentals of how a search engine works. It all starts with crawling and indexing.

A crawler is a program that moves through web pages, following links from one page to the next, and identifies new or updated content. The search engine then indexes those pages, storing them in a database so they can be looked up quickly when users perform searches.
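
To make crawling and indexing a little more concrete, here is a minimal Python sketch. It is not how Google or Bing actually work: it assumes a tiny hypothetical in-memory "web" (the `WEB` dictionary) instead of real HTTP requests, uses a content hash to spot new or updated pages, and builds an inverted index that maps each word to the pages containing it. All names here are made up for illustration.

```python
import hashlib
from collections import deque

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
WEB = {
    "/home": ("Welcome to our bakery", ["/bread", "/cakes"]),
    "/bread": ("Fresh sourdough bread baked daily", ["/home"]),
    "/cakes": ("Custom birthday cakes and fresh pastries", ["/home", "/bread"]),
}


def crawl(seed, web, known_hashes):
    """Breadth-first crawl from `seed`; return only pages that are new or changed."""
    queue, visited, changed = deque([seed]), {seed}, {}
    while queue:
        url = queue.popleft()
        text, links = web[url]
        digest = hashlib.sha256(text.encode()).hexdigest()
        if known_hashes.get(url) != digest:  # never seen, or content was updated
            changed[url] = text
            known_hashes[url] = digest
        for link in links:  # follow links we have not queued yet
            if link in web and link not in visited:
                visited.add(link)
                queue.append(link)
    return changed


def index_pages(pages, inverted_index):
    """Add each page's words to an inverted index: word -> set of URLs."""
    for url, text in pages.items():
        for urls in inverted_index.values():  # drop stale entries for updated pages
            urls.discard(url)
        for word in text.lower().split():
            inverted_index.setdefault(word, set()).add(url)


def lookup(inverted_index, query):
    """Return the URLs that contain every word of the query."""
    sets = [inverted_index.get(word, set()) for word in query.lower().split()]
    return set.intersection(*sets) if sets else set()


known_hashes, index = {}, {}
index_pages(crawl("/home", WEB, known_hashes), index)
print(sorted(lookup(index, "fresh")))     # ['/bread', '/cakes']
print(crawl("/home", WEB, known_hashes))  # {} (nothing new or updated)
```

The second crawl returns nothing because every page's hash matches what was stored the first time, which is the "new or updated content" check in miniature.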

The next step is ranking, which decides how newly indexed web pages are presented to users. This is what allows people to find specific web pages when they search using keywords.

The higher a web page appears in search results, the more likely it is to be visited by an interested reader. Ranking takes into account both how relevant a page's content is to the search and how popular the page is.
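
Here is a toy illustration of blending relevance with popularity. It is a sketch under stated assumptions, not any search engine's real formula: relevance is simply the share of a page's words that match the query, popularity is the classic PageRank score computed over a hypothetical link graph, and the 50/50 `weight` is an arbitrary choice. The pages, links, and function names are all made up.

```python
# Hypothetical pages: URL -> text, and URL -> outgoing links.
PAGES = {
    "/recipe": "chocolate cake recipe",
    "/frosting": "chocolate cake chocolate frosting",
    "/shop": "cake shop opening hours",
    "/weather": "weather forecast",
}
LINKS = {
    "/recipe": ["/frosting", "/shop"],
    "/frosting": ["/shop"],
    "/shop": ["/recipe"],
    "/weather": ["/shop"],
}


def pagerank(links, damping=0.85, iterations=100):
    """PageRank by power iteration: a page is popular if popular pages link to it."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1 - damping) / n for p in pages}
        for page, outgoing in links.items():
            targets = outgoing or pages  # a page with no links spreads its rank evenly
            for target in targets:
                new[target] += damping * rank[page] / len(targets)
        rank = new
    return rank


def relevance(text, query):
    """Share of the page's words that are query terms (a deliberately crude measure)."""
    words = text.lower().split()
    terms = set(query.lower().split())
    return sum(word in terms for word in words) / len(words) if words else 0.0


def rank_pages(query, pages, links, weight=0.5):
    """Blend relevance and popularity; `weight` is a hypothetical tuning knob."""
    popularity = pagerank(links)
    scores = {
        url: weight * relevance(text, query) + (1 - weight) * popularity[url]
        for url, text in pages.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


print(rank_pages("chocolate cake", PAGES, LINKS))
# ['/recipe', '/frosting', '/shop', '/weather']
```

In this example `/frosting` uses the query terms most heavily, yet `/recipe` comes out on top because a more popular page links to it. Change `weight` and the order can shift, which is exactly why ranking is a balancing act.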

Algorithm optimization is the practice of influencing which websites these ranking algorithms place higher in search results than others.

The algorithms that determine rankings weigh many factors, such as website structure, keyword density, link relevance, and social media presence, all of which help decide where a site ends up on SERPs (Search Engine Results Pages).
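
Keyword density is one of the easier factors to check yourself. A common definition is the number of times a keyword appears divided by the total word count, times 100. SEO tools differ on details such as how words are split and how multi-word phrases are counted, so treat this Python sketch as one reasonable version rather than a standard; the function name and sample text are made up.

```python
import re


def keyword_density(text, keyword):
    """Return keyword occurrences as a percentage of the total word count.

    Assumes one common convention: count exact (case-insensitive) matches of
    the keyword, single word or phrase, and divide by all words in the text.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Slide a window the length of the phrase across the text and count matches.
    hits = sum(words[i:i + n] == phrase for i in range(len(words) - n + 1))
    return 100.0 * hits / len(words)


sample = "Fresh bread daily. Our bread is baked fresh, and bread lovers agree."
print(keyword_density(sample, "bread"))  # 25.0
```

Three of the twelve words are "bread", hence 25%. A number that high is the kind of over-optimization the penalty systems described below are designed to catch.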

Algorithm optimization techniques have evolved from simple tweaks into complex machine learning models that can automatically analyze millions of web pages and make accurate predictions with less human input.

To make sure worthwhile new content can rank well on SERPs, search engines have implemented penalty systems for websites that use spammy tactics or “black-hat” SEO methods. Any attempt to manipulate rankings by feeding users low-quality information or by over-optimizing can be severely punished, up to exclusion from SERPs.

To ensure good content rises in rank, always practice due diligence when conducting SEO activities.

Search engines have developed strategies specifically to distinguish high-quality websites and content from low-quality ones, so that searchers receive only useful information, whether it was produced by humans or by machines.

One strategy often associated with optimizing content for search rankings is Latent Semantic Indexing (LSI). LSI is an information-retrieval technique that looks at which words tend to appear together across many documents, so that synonyms and related terms can be recognized as connected. Applied to an article, the idea is to help bots such as Google's understand how its key words and phrases relate to each other and whether the text carries real semantic value, which matters when judging how helpful the information will be to readers searching for specific answers.
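
For the curious, here is a toy version of what LSI does under the hood, written in plain Python so it runs without extra libraries. It builds a term-document matrix, keeps only the top `k` singular vectors (computed here with simple power iteration, which is fine for a handful of words but far too slow for a real corpus), and compares terms in that reduced space. The tiny document set and all function names are made up, and this says nothing about how Google itself processes pages.

```python
import math


def term_document_matrix(docs):
    """Rows are terms, columns are documents, cells are raw word counts."""
    tokenized = [d.lower().split() for d in docs]
    vocab = sorted({w for toks in tokenized for w in toks})
    return vocab, [[toks.count(term) for toks in tokenized] for term in vocab]


def top_singular_vectors(a, k, iterations=500):
    """Top-k left singular vectors/values of `a` via power iteration on A·Aᵀ."""
    n = len(a)
    # Term-term matrix M = A·Aᵀ; its eigenvalues are the squared singular values.
    m = [[sum(x * y for x, y in zip(a[i], a[j])) for j in range(n)] for i in range(n)]
    vectors, values = [], []
    for _ in range(k):
        v = [1.0 + i / n for i in range(n)]  # deterministic, non-uniform start
        for _ in range(iterations):
            w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0:
                break
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue (the squared singular value).
        lam = sum(v[i] * sum(m[i][j] * v[j] for j in range(n)) for i in range(n))
        vectors.append(v)
        values.append(math.sqrt(max(lam, 0.0)))
        # Deflate so the next pass finds the next-largest component.
        m = [[m[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return vectors, values


def lsi_term_vectors(docs, k=2):
    """Map each term to a k-dimensional vector: its row of U_k scaled by Sigma_k."""
    vocab, a = term_document_matrix(docs)
    us, sigmas = top_singular_vectors(a, k)
    return {t: [us[j][i] * sigmas[j] for j in range(k)] for i, t in enumerate(vocab)}


def cosine(x, y):
    dot = sum(p * q for p, q in zip(x, y))
    nx, ny = math.sqrt(sum(p * p for p in x)), math.sqrt(sum(q * q for q in y))
    return dot / (nx * ny) if nx and ny else 0.0


docs = ["car engine", "automobile engine", "car tire",
        "automobile tire", "banana fruit", "apple fruit"]
vocab, counts = term_document_matrix(docs)
raw = cosine(counts[vocab.index("car")], counts[vocab.index("automobile")])
vecs = lsi_term_vectors(docs, k=2)
print(f"raw car/automobile: {raw:.2f}")                                    # 0.00
print(f"LSI car/automobile: {cosine(vecs['car'], vecs['automobile']):.2f}")  # 1.00
print(f"LSI car/apple: {abs(cosine(vecs['car'], vecs['apple'])):.2f}")       # 0.00
```

In the raw counts, "car" and "automobile" never appear in the same document, so their similarity is 0. After LSI their similarity is close to 1, because both share the same neighbors ("engine" and "tire"), while "car" and "apple" stay unrelated.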

Another popular approach used today comes from artificial intelligence (AI). AI technologies such as Natural Language Processing (NLP) assess how accurate a text is by comparing it with other texts and against established benchmarks, and bots then assign rankings based on the results.

Now the juice!

What Is Optimizing for Algorithms, Really?

I read a piece by Don Reisinger titled "SEO is Dead. Long Live AO" that inspired me to elaborate further on this. To be clear, as a marketer I see the threat as real for businesses that depend on SEO to drive traffic.

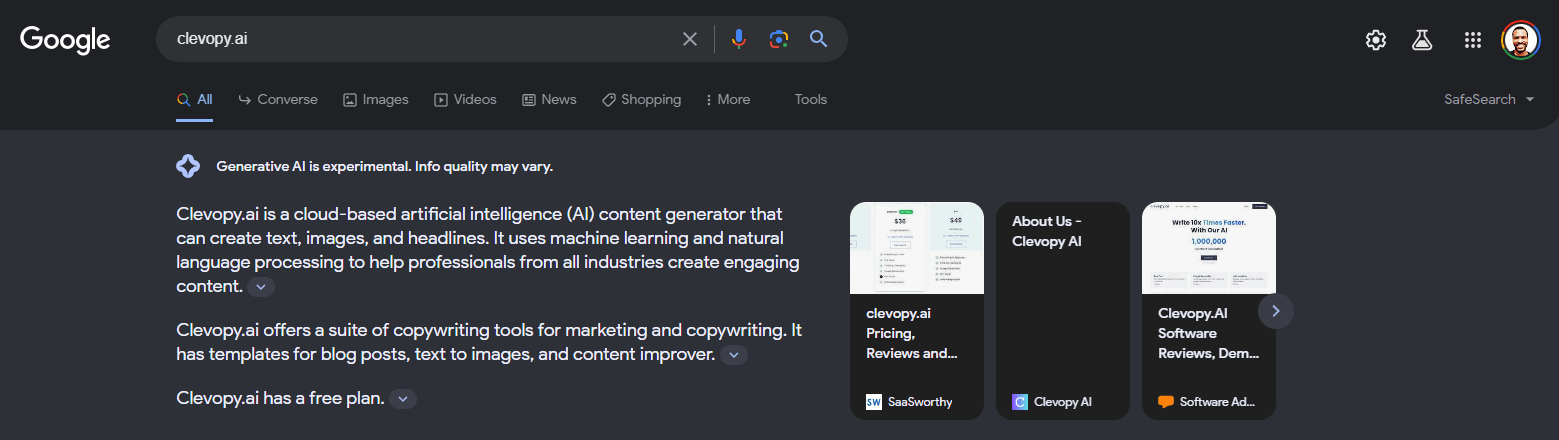

As you can see, the results are spot on, and they change depending on the search terms you use.

Reference

https://www.inc.com/don-reisinger/seo-is-dead-long-live-ao.html